Resolving the “PySpark GroupBy Cannot Pickle IO.BufferWriter” Error

When working with PySpark, one of the most common errors you might encounter involves pickling issues. A specific example of this is the "cannot pickle io.BufferWriter" error that can occur during a groupBy operation. This error can be frustrating for developers, especially when working with large datasets or complex transformations in PySpark. In this article, we’ll explore the causes of this error and how to resolve it effectively.

1. Understanding the PySpark GroupBy Operation

Before delving into the error, let’s briefly understand the groupBy operation in PySpark. This operation is used to group data based on one or more columns and apply aggregation functions such as sum(), count(), or avg().

For example:

This operation is distributed, meaning that PySpark will perform the group-by and aggregation in parallel across different worker nodes in the cluster. However, this distributed nature is the root cause of the error when pickling issues arise.

2. The “Cannot Pickle IO.BufferWriter” Error

When you run into the "cannot pickle io.BufferWriter" error, it typically happens during serialization. PySpark relies on the Python pickle module to serialize data when distributing tasks across worker nodes. However, the BufferWriter class, which is responsible for managing data writes in streams, cannot be pickled, leading to this error.

What Is Pickling?

Pickling is a process of serializing Python objects so they can be transmitted over the network or stored in files. The Python pickle module is used to perform this serialization. When PySpark tries to serialize and send functions or objects to worker nodes (via groupBy or similar operations), it encounters the problem when the object includes non-pickleable types like io.BufferWriter.

3. Common Causes of the Error

The "cannot pickle io.BufferWriter" error typically occurs under the following conditions:

- Custom Aggregation Functions: If you are using custom aggregation functions (e.g., UDFs), they might reference objects that are not pickleable, such as

io.BufferWriter. - Improper Function References: Sometimes, functions that work fine locally fail during parallel execution due to references to unpicklable objects.

- Incorrect Data Handling: If your DataFrame includes data that is linked to open file streams, network connections, or other non-pickleable objects, PySpark might fail to serialize the data for distributed processing.

4. How to Resolve the Issue

Here are some practical approaches to resolve the "cannot pickle io.BufferWriter" error:

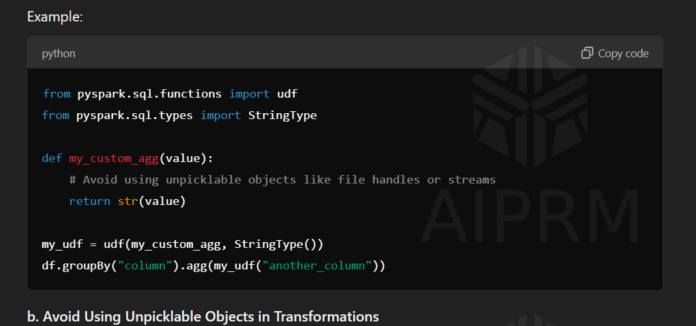

a. Check Your Custom Functions

If you are using custom aggregation functions, ensure they do not reference any non-pickleable objects. For instance, avoid using open() file handles or BufferWriter objects directly within your functions.

Example:

b. Avoid Using Unpicklable Objects in Transformations

Ensure that transformations on your DataFrame do not involve objects that are inherently unpicklable. For instance, objects that handle I/O operations (like file streams) or database connections should be avoided inside UDFs or other distributed tasks.

c. Using Broadcast Variables

If your operation involves a lookup or reference to a large dataset or a file that could lead to I/O-related pickling issues, consider using broadcast variables. Broadcast variables are distributed to all worker nodes, but they do not require serialization in the same way as regular variables.

Example:

d. Rewriting Aggregations

Instead of using custom UDFs, leverage built-in PySpark functions that are optimized and more likely to be pickleable. PySpark’s built-in aggregation functions (sum(), avg(), count(), etc.) are generally efficient and avoid the pickling issues associated with custom functions.

Example:

e. Using Arrow for Optimized Execution

Arrow is a framework for accelerating data transfer between Spark and Pandas, and it can help optimize PySpark operations, including groupBy. However, Arrow requires that the data being transferred is pickleable. Ensure that Arrow is enabled if you’re working with Pandas DataFrames.

Example:

f. Serialize Objects Explicitly

If you absolutely need to serialize complex objects, consider using the cloudpickle module, which is a more flexible version of pickle and can handle a wider range of Python objects.

Example:

5. Conclusion

The "cannot pickle io.BufferWriter" error in PySpark is often related to the use of non-pickleable objects during parallel operations, such as groupBy. By avoiding unpicklable objects in custom functions, leveraging broadcast variables, and utilizing PySpark’s optimized built-in functions, you can resolve this issue and ensure that your code runs smoothly across distributed worker nodes. Remember to avoid relying on objects that handle I/O operations, as these can cause serialization problems in PySpark’s distributed environment.